As artificial intelligence (AI) continues to reshape industries and drive innovation, the demand for high-performance computing in data centers has reached unprecedented levels. Managing the cooling and power requirements of an AI data center with a 50kW rack density presents a unique set of challenges. In this blog post, we will explore effective strategies and cutting-edge solutions for maintaining performance and efficiency in such a demanding environment.

Precision Cooling Systems

The heart of any high-density data center is its cooling system. For an AI data center with a 50kW rack density, precision cooling is non-negotiable. Invest in advanced cooling solutions, such as in-row or overhead cooling units, that can precisely target and remove the heat generated by high-density servers. Because these units sit close to the racks and shorten the path the air travels, they offer greater control and efficiency than traditional perimeter cooling.
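
A rough airflow estimate shows why 50kW strains air cooling. The Python sketch below applies the sensible-heat relation Q = ρ·V·c_p·ΔT. Several inputs are illustrative assumptions, not figures from this post: the air properties, the 11 K (about 20 °F) inlet-to-exhaust temperature rise, and the 10kW comparison rack.

```python
# Rough airflow needed to air-cool a rack, from the sensible-heat relation
# Q = rho * V * cp * dT. All constants below are assumptions for illustration.
AIR_DENSITY_KG_M3 = 1.2       # assumed: air near sea level at about 20 °C
AIR_CP_J_PER_KG_K = 1005.0    # assumed: specific heat of dry air
M3S_TO_CFM = 2118.88          # 1 m^3/s expressed in cubic feet per minute


def required_airflow_cfm(rack_kw: float, delta_t_k: float) -> float:
    """Airflow (CFM) that removes rack_kw of heat with a delta_t_k air temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    heat_w = rack_kw * 1000.0
    flow_m3_per_s = heat_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)
    return flow_m3_per_s * M3S_TO_CFM


if __name__ == "__main__":
    # 10 kW is a hypothetical legacy rack used only for comparison.
    for kw in (10, 50):
        print(f"{kw} kW rack, 11 K rise: {required_airflow_cfm(kw, 11):,.0f} CFM")
```

The relation is linear, so a 50kW rack needs five times the airflow of a 10kW rack at the same temperature rise. Under these assumptions that comes to roughly 8,000 CFM for a single rack. This helps explain why targeted in-row or overhead units are favored at this density.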

Liquid Cooling Technologies

Liquid cooling has emerged as a game-changer for high-density computing environments. Liquids carry far more heat per unit volume than air. As a result, immersion cooling systems and direct-to-chip solutions can effectively dissipate the heat generated by AI processors, allowing for higher power densities without compromising reliability. Explore liquid cooling options to optimize temperature control in your data center.
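
A similar estimate for a water loop shows why liquid cooling scales better. This sketch assumes plain water and a 10 K supply-to-return temperature rise. Both are illustrative assumptions; glycol mixtures and real direct-to-chip loops will differ.

```python
# Rough water flow needed to liquid-cool a rack: mass flow = Q / (cp * dT).
# Assumptions for illustration: plain water and a 10 K supply-to-return rise.
# Glycol mixtures have a lower specific heat and need proportionally more flow.
WATER_CP_J_PER_KG_K = 4186.0   # specific heat of water
WATER_DENSITY_KG_PER_L = 1.0   # assumed: close enough at typical loop temperatures


def required_coolant_lpm(rack_kw: float, delta_t_k: float) -> float:
    """Litres per minute of water that absorb rack_kw of heat with a delta_t_k rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_per_s = rack_kw * 1000.0 / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


if __name__ == "__main__":
    print(f"50 kW rack, 10 K rise: {required_coolant_lpm(50, 10):.1f} L/min")
```

Under these assumptions, a 50kW rack needs roughly 72 L/min of water. That is a small volume compared with the thousands of CFM of air the same heat load would require.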

High-Efficiency Power Distribution

To meet the power demands of a 50kW rack density, efficient power distribution is paramount. Implementing high-voltage power distribution systems and exploring alternative power architectures, such as busway systems, can enhance energy efficiency and reduce power losses. At a higher voltage, the same power is delivered at a lower current, which cuts resistive (I²R) losses in the conductors. This not only supports reliability but also contributes to sustainability efforts.
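
The high-voltage point can be quantified with the balanced three-phase power formula, I = P / (√3 · V_LL · PF). The 208V and 415V line-to-line voltages and the unity power factor below are illustrative assumptions. Substitute your facility's actual values.

```python
import math

# Balanced three-phase load: I = P / (sqrt(3) * V_line_to_line * PF).
# The voltages and unity power factor used below are illustrative assumptions.


def line_current_a(load_kw: float, line_voltage_v: float, power_factor: float = 1.0) -> float:
    """Per-phase line current (A) drawn by a balanced three-phase load."""
    return load_kw * 1000.0 / (math.sqrt(3) * line_voltage_v * power_factor)


def relative_conductor_loss(v_low: float, v_high: float) -> float:
    """I^2*R loss at v_high as a fraction of the loss at v_low (same load, same conductor)."""
    # Current scales with 1/V, so resistive loss scales with (v_low / v_high) ** 2.
    return (v_low / v_high) ** 2


if __name__ == "__main__":
    for volts in (208, 415):
        print(f"50 kW at {volts} V: {line_current_a(50, volts):.0f} A per phase")
    print(f"Loss at 415 V vs 208 V: {relative_conductor_loss(208, 415):.0%}")
```

For a 50kW rack, this gives about 139 A per phase at 208V versus about 70 A at 415V. With the same conductors, the resistive loss at 415V is roughly a quarter of the loss at 208V.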

Redundancy and Resilience

A high-density AI data center demands a robust power and cooling infrastructure with built-in redundancy. Incorporate N+1 or 2N redundancy models for both cooling and power systems to mitigate the impact of potential failures. Redundancy not only enhances reliability but also allows for maintenance without disrupting critical operations.
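
One way to compare the two redundancy models is to count the units each requires. This sketch assumes identical units. The example load is a hypothetical row of ten 50kW racks (500kW) served by hypothetical 150kW cooling units; the numbers are for illustration only.

```python
import math


def units_required(load_kw: float, unit_capacity_kw: float, scheme: str) -> int:
    """Count identical units to install under a given redundancy scheme."""
    if load_kw <= 0 or unit_capacity_kw <= 0:
        raise ValueError("load and unit capacity must be positive")
    n = math.ceil(load_kw / unit_capacity_kw)  # N: just enough capacity for the load
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1        # one spare unit beyond N
    if scheme == "2N":
        return 2 * n        # a fully duplicated second set of N units
    raise ValueError(f"unknown scheme: {scheme!r}")


if __name__ == "__main__":
    load_kw = 10 * 50.0     # hypothetical row of ten 50 kW racks
    unit_kw = 150.0         # hypothetical capacity of one cooling unit
    for scheme in ("N", "N+1", "2N"):
        print(f"{scheme}: {units_required(load_kw, unit_kw, scheme)} units")
```

With these inputs, N is 4 units, N+1 is 5, and 2N is 8. N+1 rides through the loss of any single unit. 2N can lose an entire set of equipment, at the cost of twice as many units.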

Dynamic Thermal Management

Use intelligent thermal management systems that adapt to the dynamic workloads of AI applications. These systems can adjust cooling resources in real time, keeping the infrastructure optimized for varying loads. Dynamic thermal management improves energy efficiency by supplying cooling only when and where it is needed.
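
As a simplified illustration, the sketch below uses proportional control. Cooling output rises as rack inlet temperature climbs above a setpoint and is clamped between a minimum and a maximum. The setpoint, gain, and limits are hypothetical tuning values. Production systems typically use full PID control, rate limits, and many sensors rather than a single reading.

```python
def cooling_output_pct(
    inlet_temp_c: float,
    setpoint_c: float = 25.0,       # hypothetical target rack inlet temperature
    gain_pct_per_k: float = 20.0,   # hypothetical proportional gain
    min_pct: float = 30.0,          # hypothetical floor so cooling never shuts off entirely
    max_pct: float = 100.0,
) -> float:
    """Proportional control: raise cooling output as inlet temperature exceeds the setpoint."""
    error_k = inlet_temp_c - setpoint_c
    output = min_pct + gain_pct_per_k * error_k
    # Clamp to the equipment's allowable operating range.
    return max(min_pct, min(max_pct, output))


if __name__ == "__main__":
    # Simulated inlet readings as an AI workload ramps up.
    for temp in (22.0, 25.0, 26.0, 28.0, 30.0):
        print(f"inlet {temp:.0f} °C -> cooling {cooling_output_pct(temp):.0f}%")
```

With the defaults, readings of 22, 25, 26, 28, and 30 °C map to outputs of 30, 30, 50, 90, and 100 percent. Cooling ramps up only as the workload drives temperatures higher.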

Energy-Efficient Hardware

Opt for energy-efficient server hardware designed for high-density environments. AI-optimized processors often include advanced power management features, such as power capping and dynamic voltage and frequency scaling, that can significantly reduce energy consumption. Choosing hardware that aligns with your data center's efficiency goals is a key factor in managing power and cooling requirements effectively.

Monitoring and Analytics

Implement comprehensive monitoring and analytics tools to gain insights into the performance of your AI data center. Real-time data on temperature, power consumption, and system health can help identify potential issues before they escalate. Proactive monitoring allows for predictive maintenance and ensures optimal conditions for your high-density racks.
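
A basic form of proactive monitoring is to average recent readings per rack and flag sustained threshold violations. The `Reading` record, the 27 °C inlet limit, and the 50kW power limit below are hypothetical choices for illustration. They are not the interface of any particular monitoring product; a real deployment would pull telemetry from its own sensors or monitoring platform.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    rack_id: str          # hypothetical rack identifier
    inlet_temp_c: float
    power_kw: float


# Hypothetical alert thresholds; tune them to your own equipment and design limits.
MAX_INLET_TEMP_C = 27.0
MAX_POWER_KW = 50.0


def check_alerts(readings: list[Reading], window: int = 3) -> list[str]:
    """Flag racks whose recent average inlet temperature or power exceeds a threshold.

    Averaging the last `window` readings per rack smooths out single-sample spikes,
    so alerts reflect a sustained trend rather than one noisy sample.
    """
    by_rack: dict[str, list[Reading]] = {}
    for r in readings:
        by_rack.setdefault(r.rack_id, []).append(r)

    alerts = []
    for rack_id, rack_readings in sorted(by_rack.items()):
        recent = rack_readings[-window:]
        avg_temp = mean(r.inlet_temp_c for r in recent)
        avg_power = mean(r.power_kw for r in recent)
        if avg_temp > MAX_INLET_TEMP_C:
            alerts.append(f"{rack_id}: avg inlet {avg_temp:.1f} C exceeds {MAX_INLET_TEMP_C} C")
        if avg_power > MAX_POWER_KW:
            alerts.append(f"{rack_id}: avg power {avg_power:.1f} kW exceeds {MAX_POWER_KW} kW")
    return alerts


if __name__ == "__main__":
    sample = [
        Reading("A1", 24.0, 45.0), Reading("A1", 25.0, 46.0),
        Reading("B2", 28.0, 52.0), Reading("B2", 29.0, 53.0),
    ]
    for alert in check_alerts(sample):
        print(alert)
```

In the sample, rack B2 averages 28.5 °C and 52.5kW and raises both a temperature alert and a power alert. Rack A1 stays within limits and raises none.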

Successfully cooling and powering an AI data center with a 50kW rack density requires a holistic and forward-thinking approach. Build a resilient, high-performing infrastructure by investing in:

- precision cooling
- liquid cooling technologies
- high-efficiency power distribution
- redundancy
- dynamic thermal management
- energy-efficient hardware
- robust monitoring tools

Embrace the technological advancements available in the market, not only to meet the challenges posed by high-density AI computing but also to excel in this dynamic and transformative era of data center management.

Author's Note:

Not a bad blog post, right? I was tasked with writing a blog post on how to power and cool high-density racks for AI applications. So, I had ChatGPT write it in 15 seconds, saving me a ton of time and letting me enjoy watching my kid’s athletic events this weekend. As end users embrace AI technology, it is imperative that we understand how to support the hardware and software behind these time-saving technologies. Over the past six months, about 20% of my time has been spent discussing how to support customer rack densities of 35kW to 75kW.

Another key point is balancing AI's capabilities against the end user’s ability to recognize its limitations and areas for improvement. AI taps into the vast store of information that is the Internet. That is powerful, but (at least currently) its knowledge can appear to be about two years behind. For example, ChatGPT's original draft of this blog post was written around a 35kW rack density. Today, however, I regularly work with AI racks that average 50kW. I have seen them go up to 75kW, and I know that applications can reach upwards of 300kW per rack. So please note: everywhere this post says 50kW, a human made the necessary edit to the AI's outdated "35kW".

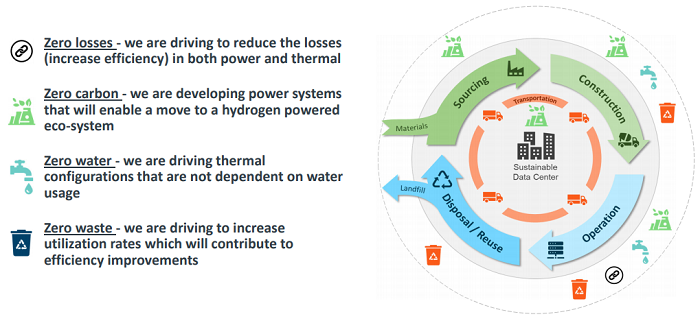

Also, just for reference, a 75kW application requires about 21 tons of cooling for a single IT rack! One ton of cooling removes 12,000 BTU/h, or about 3.5kW of heat. In other words, these new high-density technologies require the equivalent of one traditional perimeter CRAC unit to cool one AI IT rack.

DVL is here to provide engineering and manufacturing support for designing the critical infrastructure behind your efficient AI technology, including:

- Cooling
- PLC Switchgear
- Busway Distribution
- Rack Power Distribution
- Cloud Monitoring
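
The tonnage figures in this note can be reproduced with a short Python sketch for the 50kW, 75kW, and 300kW rack densities mentioned above. It assumes, as is standard for IT equipment, that essentially all electrical power drawn by the rack ends up as heat the cooling system must remove.

```python
# 1 ton of refrigeration = 12,000 BTU/h, which is about 3.517 kW.
KW_PER_TON = 3.51685


def tons_of_cooling(it_load_kw: float) -> float:
    """Cooling capacity (tons of refrigeration) needed to remove it_load_kw of heat.

    Assumes all electrical power drawn by the IT equipment becomes heat.
    """
    return it_load_kw / KW_PER_TON


if __name__ == "__main__":
    for kw in (50, 75, 300):
        print(f"{kw:>3} kW rack -> {tons_of_cooling(kw):.1f} tons")
```

Running it gives about 14.2 tons at 50kW, 21.3 tons at 75kW, and 85.3 tons at 300kW per rack.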