By: Simon Brady

The trend currently being discussed at almost every IT and technology innovation meeting around the world is the Internet of Things (or IoT since, like everything else in the tech world, it has been shortened to an acronym). We are rapidly moving beyond the original Internet as we know it, built by people for people, toward one in which devices and machines are connected and communicate with each other across a vast network.

But what counts as a “thing” in the Internet of Things? Almost any object you can think of fits into this category. You can assign an IP address to nearly anything, whether it’s a kitchen sink, a closet or a UPS. Any of these can be connected to the Internet and begin sending signals and data to a server, as long as it has a digital sensor integrated.

Today, roughly 1% of “things” are connected to the Internet, but according to Gartner, Inc. (a technology research and advisory corporation), there will be nearly 26 billion devices on the Internet of Things by 2020.

At first, it might sound a bit scary, right? Hollywood movies, and more recently even Professor Stephen Hawking, have warned us that it’s dangerous if machines talk to other machines and become self-aware. So should we be frightened or excited? There is no simple answer, because this technological revolution is not yet fully understood.

Throughout history, many great inventions were at first doubted and rejected. Remember how, in the early days of the Internet, some people predicted it would be a great failure? Few envisioned how it would change the world and eventually become an essential part of our lives.

Billion-dollar companies such as Google, Microsoft, Samsung and Cisco are investing heavily in IoT, a strong sign that the Internet of Things is here to stay and that successful businesses will increasingly build products and services with the functionality IoT requires.

So, how does it work in practice? For consumers, interconnecting their own devices can lead to a better quality of life and fewer worries. For example, a smart watch or health-monitoring bracelet could be connected to a coffee maker, so that when you get out of bed, hot coffee is waiting for you in the kitchen. Temperature sensors in your house can manage the heating in each room and even learn when you are home, making your boiler more efficient and saving energy. To make everyday life easier, IoT will extend to items such as parking sensors, washing machines and ovens: basically anything that can be connected and networked through a control device. Your fridge could know everything about your diet and daily calorie intake and react accordingly, sending you updated grocery lists and recommended recipes. Samsung is already building smart fridges that help you keep track of items and tell you when they are out of date, and in the future they may automatically order milk when you are running low.

But this is only the micro level. Let’s think bigger: autonomous cars, smart cities and smart manufacturing tools. Bridges could track every vehicle, monitor traffic flow and automatically open and close lanes to improve traffic safety, and cars could talk to each other on highways to keep rush-hour traffic moving and enhance the driving experience. This is more than simply connecting machines or sensors; it’s about using the data from all these connected devices in ways that can significantly improve life as we know it.

The key to the IoT is that connected devices can send data within a very short timeframe, which is critical in many circumstances, but that’s not all. Instead of simply storing the data, the system can also analyse it immediately and trigger an action without any human intervention.
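To make this analyse-and-act loop concrete, here is a minimal Python sketch based on the bridge example above. Everything in it is hypothetical: the `Reading` and `RuleEngine` names, the three-reading moving average, the threshold of 40 vehicles per minute and the `open_extra_lane` action are illustrative assumptions, not part of any real IoT platform. Each reading is analysed the moment it arrives, and the action fires with no one in the loop.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List


@dataclass
class Reading:
    """A single timestamped value from a hypothetical sensor."""
    sensor_id: str
    timestamp: float  # seconds since an arbitrary reference point
    value: float      # e.g. vehicles per minute crossing the bridge


class RuleEngine:
    """Analyses each reading on arrival and fires an action automatically.

    The rule fires when the moving average of the last `window` readings
    exceeds `threshold` (illustrative values, not from any real system).
    """

    def __init__(self, window: int, threshold: float,
                 action: Callable[[Reading, float], str]) -> None:
        self.window = window
        self.threshold = threshold
        self.action = action
        # Keep only the short window needed for analysis, not the full history.
        self.recent: Deque[float] = deque(maxlen=window)
        self.log: List[str] = []

    def ingest(self, reading: Reading) -> None:
        self.recent.append(reading.value)
        if len(self.recent) < self.window:
            return  # not enough data yet to judge a trend
        average = sum(self.recent) / len(self.recent)
        if average > self.threshold:
            self.log.append(self.action(reading, average))


def open_extra_lane(reading: Reading, average: float) -> str:
    # Hypothetical actuator; here it only records what would be done.
    return f"{reading.sensor_id}: avg {average:.1f} > limit, opening extra lane"


engine = RuleEngine(window=3, threshold=40.0, action=open_extra_lane)
for t, count in enumerate([30, 35, 38, 45, 50, 52]):
    engine.ingest(Reading("bridge-north", float(t), float(count)))
print(engine.log)
```

A moving average is used instead of a single reading so that one noisy sample does not trigger the action on its own; the window size is a tuning choice.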

Companies worldwide can benefit greatly from Internet of Things software applications, increasing their products’ efficiency and availability whilst decreasing costs and negative environmental effects.

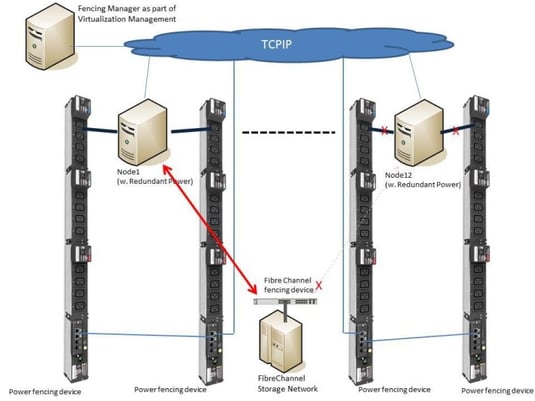

In a data center, for example, by interconnecting all active components, including UPS systems, chillers, cooling units and PDUs, a data center administrator can easily monitor and supervise them as a group. Control solutions like Liebert® iCOM are more than simple monitoring interfaces; they can coordinate all of the cooling systems and deliver the required airflow at the required temperature on demand. When problems arise, alerts and notifications sent to the data center administrator are essential for restoring normal operation. But wait: isn’t the Internet of Things supposed to be something new? Liebert iCOM has been on the market for several years now. Let’s clear this up.

The term “Internet of Things” was first used in 1999 by British visionary Kevin Ashton, and the underlying ideas have been in development for a long time. The name can be a bit confusing: it only recently made its way into the mainstream media, so people assume it’s something very new. In fact, major companies have already been using and developing IoT for years, changing perspectives on how things should be done.
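Returning to the data center scenario above, the monitoring-and-alerting part can be sketched in a few lines of Python. This is a hypothetical, generic illustration and not how Liebert iCOM or any other product works internally: the device names, metric names and operating limits are made up for the example, and real limits would come from the equipment vendor and the site’s own operating policy.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Limit:
    """Acceptable operating range for one metric (illustrative values only)."""
    low: float
    high: float


# Hypothetical metrics and limits, keyed by (device type, metric name).
LIMITS: Dict[Tuple[str, str], Limit] = {
    ("UPS", "battery_charge_pct"): Limit(80.0, 100.0),
    ("cooling", "supply_air_c"): Limit(18.0, 27.0),
    ("PDU", "load_pct"): Limit(0.0, 80.0),
}


def check_status(readings: Dict[str, Dict[str, float]],
                 kinds: Dict[str, str]) -> List[str]:
    """Return one alert message per reading outside its configured range.

    `readings` maps a device name to its latest metric values, and
    `kinds` maps a device name to its type (UPS, cooling, PDU, ...).
    """
    alerts: List[str] = []
    for device, metrics in sorted(readings.items()):
        kind = kinds[device]
        for metric, value in sorted(metrics.items()):
            limit = LIMITS.get((kind, metric))
            if limit is None:
                continue  # this metric is not monitored
            if not limit.low <= value <= limit.high:
                alerts.append(
                    f"ALERT {device} ({kind}) {metric}={value} "
                    f"outside [{limit.low}, {limit.high}]"
                )
    return alerts


kinds = {"ups-1": "UPS", "cool-2": "cooling", "pdu-3": "PDU"}
readings = {
    "ups-1": {"battery_charge_pct": 95.0},
    "cool-2": {"supply_air_c": 29.5},
    "pdu-3": {"load_pct": 85.0},
}
for alert in check_status(readings, kinds):
    print(alert)  # in practice: send to the administrator by e-mail, SMS, etc.
```

Keeping the limits in a single table, separate from the checking logic, makes it easy to add new device types as more components are connected.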

However, we are still in the early phase of taking full advantage of this innovation in every aspect of life. The greatest challenges IoT currently faces are high costs and security threats. For the time being, IoT solutions can be really expensive, so the industry is working to lower costs and allow more and more people and businesses to adopt them.

Security breaches are also a real concern, since IoT is still very vulnerable; many hackers have shown a strong interest in attacking connected devices, so developers need to be extremely careful when it comes to security protocols.
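As one small example of what being careful can mean in practice, a device can sign every message it sends so that the server can detect tampering. The sketch below uses HMAC-SHA256 from Python’s standard `hmac` and `hashlib` modules; the key and the message format are hypothetical. It covers only message integrity and authenticity: it does not encrypt the data or stop an attacker from replaying an old message, which a real deployment would typically handle with transport encryption such as TLS and a timestamp or counter in each message.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret provisioned onto the device. In practice each
# device should have its own key, stored securely and never hard-coded.
DEVICE_KEY = b"example-per-device-secret"


def sign_message(payload: dict, key: bytes) -> dict:
    """Serialize the payload and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"body": body.decode("utf-8"), "tag": tag}


def verify_message(message: dict, key: bytes) -> bool:
    """Recompute the tag on the server side and compare in constant time."""
    expected = hmac.new(key, message["body"].encode("utf-8"),
                        hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


msg = sign_message({"sensor": "fridge-1", "temp_c": 4.2}, DEVICE_KEY)
print(verify_message(msg, DEVICE_KEY))       # True

# An attacker who alters the reading without the key cannot fix the tag.
tampered = dict(msg, body=msg["body"].replace("4.2", "12.0"))
print(verify_message(tampered, DEVICE_KEY))  # False
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many characters of a forged tag were correct.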

All things considered, the Internet of Things is a huge opportunity to create a better life for everybody, to build a strong foundation in the technology field and to develop products and solutions that could genuinely change the world.

For More Emerson Network Power Blogs, CLICK HERE